Machine learning (ML) is great for augmenting human intelligence, but academic and industry researchers have been debating the severity of its carbon footprint. We’re still learning about the magnitude of machine learning’s effects on the environment, but there are solutions available to help companies choose the most eco-friendly options for managing and distributing workloads.

The ML carbon footprint

Machine learning is becoming indispensable while also drawing more scrutiny for its environmental effects.

Today’s businesses reap many benefits from machine learning, such as automation, complex problem solving, and real-time data insights. However, ML consumes a lot of energy, and generating that energy emits carbon, potentially accelerating climate change. Widely cited research claims that training a transformer with neural architecture search emits up to 626,000 pounds of carbon dioxide. More carbon dioxide in the atmosphere means a rise in average global temperatures, which disrupts ecosystems and causes erratic weather.

Types of ML environmental effects and how they’re measured

Machine learning impacts the environment in two main ways, namely through the amount of energy it consumes and the carbon it emits.

Energy use

Carbon emissions are not the only environmental effect of ML. Effective ML depends on storing and processing vast amounts of data (an estimated 2.5 quintillion bytes of data are generated every day), so the hardware behind ML requires a lot of electricity.

Energy consumption is measured in megawatt-hours (MWh). One MWh is equivalent to 1,000,000 watts of electricity used over the course of one hour. One million MWh equals one terawatt-hour (TWh).
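The unit relationships above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function names are made up for this example, and the 3,000-watt server is a hypothetical figure, not a measurement from any study.

```python
WATT_HOURS_PER_MWH = 1_000_000  # 1 MWh = 1,000,000 watts used for one hour
MWH_PER_TWH = 1_000_000         # 1 TWh = 1,000,000 MWh


def watts_over_hours_to_mwh(watts: float, hours: float) -> float:
    """Energy in MWh for a constant power draw sustained over some hours."""
    return watts * hours / WATT_HOURS_PER_MWH


def mwh_to_twh(mwh: float) -> float:
    """Convert megawatt-hours to terawatt-hours."""
    return mwh / MWH_PER_TWH


# Hypothetical example: a server drawing 3,000 W for a full day.
daily_mwh = watts_over_hours_to_mwh(3_000, 24)
print(f"{daily_mwh:.3f} MWh per day")
```

At that hypothetical draw, a single server uses a small fraction of one MWh per day, which is why data center totals are usually quoted in TWh.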

In 2020 the US data center industry consumed upwards of 400 terawatt-hours, accounting for one to two percent of global electricity use. Without efforts to use renewable energy, overall data center energy consumption is projected to grow between three and 13 percent by 2030.

A data center’s level of energy efficiency is measured by Power Usage Effectiveness (PUE), the common industry metric by which to compare data centers. PUE is the ratio of a data center’s total energy use to the energy consumed by its computing hardware, so it indicates how much of the total is devoted to that hardware rather than to overhead such as cooling. A “perfect score” of 1.0 would mean that the data center operates at maximum efficiency, so the lower the score, the better.
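As a rough sketch, PUE is conventionally computed as total facility energy divided by the energy used by the IT equipment. The function names and the kWh figures below are illustrative assumptions, chosen only to show how a score relates to overhead.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy (1.0 is the ideal)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue: float) -> float:
    """Fraction of total energy spent on non-IT overhead such as cooling."""
    return 1 - 1 / pue


# Illustrative figures only: 1,580 kWh drawn by the facility, 1,000 kWh by the hardware.
pue = power_usage_effectiveness(1_580, 1_000)
print(f"PUE {pue:.2f}, overhead share {overhead_share(pue):.0%}")
```

With these example numbers, a bit more than a third of the facility’s energy goes to something other than computing, while a PUE of 1.0 would mean no overhead at all.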

In 2020 the industry PUE average for data centers was 1.58, though an individual data center may have a PUE as high as 3.0. The acceptable industry standard for data centers is between 1.5 and 1.8. Cloud providers have an average PUE of only 1.10. This at least partially explains the widespread adoption of the cloud over the traditional data center.

Carbon emissions

Carbon emissions from ML fall into two main categories: lifecycle emissions and operational emissions.

Lifecycle emissions broadly encompass carbon emitted while manufacturing the components needed for machine learning, from chips to physical data centers.

Operational emissions are attributed more narrowly to energy expended to operate ML hardware, including the electricity and cooling that it needs.

Operational emissions, the narrower of the two categories, are measured in metric tons. Each metric ton equates to about 2,205 pounds. In addition, the electricity a data center uses is characterized by its carbon intensity, meaning the amount of carbon emitted to generate one unit of electricity.
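One common way to put these pieces together is to multiply the energy drawn by the hardware by the data center’s PUE and by the carbon intensity of its electricity. The sketch below assumes that simple model. The function names, the 100 MWh workload, and the intensity of 0.4 metric tons per MWh are illustrative assumptions, not figures from either study discussed in this article.

```python
POUNDS_PER_METRIC_TON = 2_205  # conversion factor quoted in the text


def operational_emissions_tons(
    it_energy_mwh: float,
    pue: float,
    carbon_intensity_tons_per_mwh: float,
) -> float:
    """Estimate operational emissions in metric tons.

    it_energy_mwh: energy drawn by the ML hardware itself
    pue: data center overhead multiplier (1.0 means no overhead)
    carbon_intensity_tons_per_mwh: carbon emitted per MWh of electricity
    """
    return it_energy_mwh * pue * carbon_intensity_tons_per_mwh


def metric_tons_to_pounds(tons: float) -> float:
    """Convert metric tons to pounds."""
    return tons * POUNDS_PER_METRIC_TON


# Illustrative inputs only (assumed, not measured).
tons = operational_emissions_tons(100, pue=1.58, carbon_intensity_tons_per_mwh=0.4)
print(f"{tons:.1f} metric tons, about {metric_tons_to_pounds(tons):,.0f} pounds")
```

Because the three factors multiply, halving any one of them (energy, PUE overhead, or carbon intensity) halves the estimate, which is why the reduction strategies later in this article target each factor separately.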

Estimates of how much carbon machine learning emits range from 0.03 pounds per hour to two pounds per hour.

Conflicting views on the severity of ML carbon emissions

So how bad is the ML carbon footprint really? There are generally two opposing camps.

Viewpoint 1: ML carbon emissions are alarmingly high

This view draws on an oft-cited 2019 academic study from researchers at the University of Massachusetts Amherst. In the UMass study, researchers estimate that carbon emissions from training NLP models are six times higher than those of the average American car over its “lifetime” of 120,000 miles.

Viewpoint 2: ML carbon emissions are not that bad

A more recent Google-backed study disputes the UMass findings. In this April 2022 study, researchers from Google and the University of California, Berkeley claim that the UMass study overestimates carbon emissions.

The authors attribute this overestimation to:

- Basing insights on average data center PUE and average carbon intensity instead of “real numbers for a Google data center”

- Deriving conclusions from data based on older graphics processing units (GPUs) not optimized for ML

- Miscalculating the computational cost of neural architecture search (NAS)

Google and UC Berkeley researchers claim that:

- The percentage of energy consumption devoted to ML remains the same compared to overall increasing energy consumption. This suggests that ML is not a major contributor to increased energy use

- Data center capacity, on the whole, is increasing at a higher rate than data center energy consumption, signaling increasing data center efficiency

- Since NAS identifies the most efficient ML model, it “likely…reduces total ML energy usage” compared with not using NAS

- ML technology is improving to such a degree that the amount of energy used and carbon emitted is decreasing

The magnitude of energy consumption and carbon emissions remains up for debate between academics and industry researchers. Yet both camps agree that measures to reduce energy consumption and carbon emissions are worth pursuing for more sustainable ML.

8 strategies for reducing ML’s carbon footprint

Fortunately, researchers and tech companies alike have come up with strategies and software solutions to address ML’s carbon footprint:

- Transparency

- Model reuse

- Model selection

- Hardware use

- Cloud deployment

- Location selection

- Smart orchestration

- Automated tracking

Transparency and accountability

Researchers from the Google-UC Berkeley study argue for more transparency in the industry. That is, tech companies should publish their energy consumption and carbon emission data, both to be held accountable and to foster competition towards more eco-friendly ML.

Reuse and reduce

When training an ML model, there’s no need to reinvent the wheel by training from scratch. Doing so only requires more power from the central processing unit (CPU) or GPU. For example, Azure offers pre-trained models for vision, speech, language, and search that use less energy and reduce the carbon footprint.

The four Ms

Google and Berkeley researchers also propose ways to “reduce energy by up to 100x and reduce carbon footprint by up to 1000x.”

- Model. One way to reduce the carbon footprint is to select the best model. Techniques like NAS help with finding the most efficient ML model architectures without sacrificing the quality and accuracy of ML.

- Machine. Tensor processing units (TPUs) and GPUs are optimized for ML training, unlike general-purpose processors. Using TPUs and GPUs results in two to five times less energy consumption and, by extension, a smaller carbon footprint.

- Mechanization. Migrating from on-premises data centers to cloud computing reduces energy use by up to half, according to the 2022 study from Google and Berkeley.

- Map. It turns out location matters. A further benefit of cloud computing is that ML engineers can run their ML workloads in the most energy-efficient locations. This cuts the carbon footprint by up to ten times. The Google-Berkeley study pointed out, for instance, that data centers in Oklahoma and Iowa received among the highest carbon-free energy scores.
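The “Map” idea can be sketched as picking the candidate location whose electricity has the lowest carbon intensity. The region names and intensity values below are hypothetical placeholders rather than real provider data, and the helper names are made up for this example.

```python
def greenest_region(intensity_by_region: dict[str, float]) -> str:
    """Return the region with the lowest carbon intensity."""
    if not intensity_by_region:
        raise ValueError("at least one region is required")
    return min(intensity_by_region, key=intensity_by_region.get)


def reduction_factor(baseline_intensity: float, chosen_intensity: float) -> float:
    """How many times lower the footprint is for the same energy use."""
    return baseline_intensity / chosen_intensity


# Hypothetical carbon intensities in metric tons per MWh (not real data).
candidate_regions = {
    "region-a": 0.70,
    "region-b": 0.35,
    "region-c": 0.07,
}

best = greenest_region(candidate_regions)
factor = reduction_factor(candidate_regions["region-a"], candidate_regions[best])
print(f"Run in {best}: roughly {factor:.0f}x lower footprint than region-a")
```

In practice the choice would also weigh latency, cost, and data residency, which this sketch deliberately leaves out.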

Software that enables eco-friendly ML

Some software solutions enable ML practitioners to distribute workloads in a way that uses less power and emits less carbon.

For example, Valohai is an MLOps platform that acts as the intermediary between data scientists and the hardware on which they run their ML workloads. It enables companies to run ML workloads more efficiently in two key ways: smart orchestration and automatic tracking.

Smart orchestration

When a data scientist wants to train a model, they simply select their code, data, and where they want to run the model. Valohai spins up the cloud instances that are needed and automatically shuts them down when the workload is complete. This way, instances don’t keep running and using energy until a data scientist manually turns them off, which they sometimes forget to do.
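The general pattern of tying an instance’s lifetime to the workload can be sketched with a Python context manager. This is not Valohai’s API or implementation: `ephemeral_instance`, `fake_start`, and `fake_stop` are hypothetical names, and the in-memory set merely stands in for a real cloud provider.

```python
from contextlib import contextmanager
from typing import Callable, Iterator


@contextmanager
def ephemeral_instance(
    start: Callable[[], str],
    stop: Callable[[str], None],
) -> Iterator[str]:
    """Start an instance and guarantee it is stopped, even if the job fails."""
    instance_id = start()
    try:
        yield instance_id
    finally:
        stop(instance_id)  # always runs, so nothing is left running by mistake


def run_on_ephemeral_instance(job, start, stop):
    """Run job(instance_id) on an instance that exists only for the job's duration."""
    with ephemeral_instance(start, stop) as instance_id:
        return job(instance_id)


# Stand-ins for a real provider (hypothetical, in-memory only).
running: set[str] = set()


def fake_start() -> str:
    running.add("instance-1")
    return "instance-1"


def fake_stop(instance_id: str) -> None:
    running.discard(instance_id)


result = run_on_ephemeral_instance(lambda iid: f"trained on {iid}", fake_start, fake_stop)
print(result, "| still running:", sorted(running))
```

The `finally` clause is the point of the design: shutdown does not depend on anyone remembering to do it, nor on the training job finishing without errors.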

Aside from cloud location, Valohai also allows users to find the optimal infrastructure for each task. Data scientists are able to mix and match various cloud providers and on-premises data centers to arrive at the most efficient option to run an ML workload.

With Valohai, data scientists can not only build complex ML pipelines but also schedule them to run at certain intervals. Automated scheduling to run ML workloads is thus another feature that cuts down on energy use and reduces the carbon footprint.

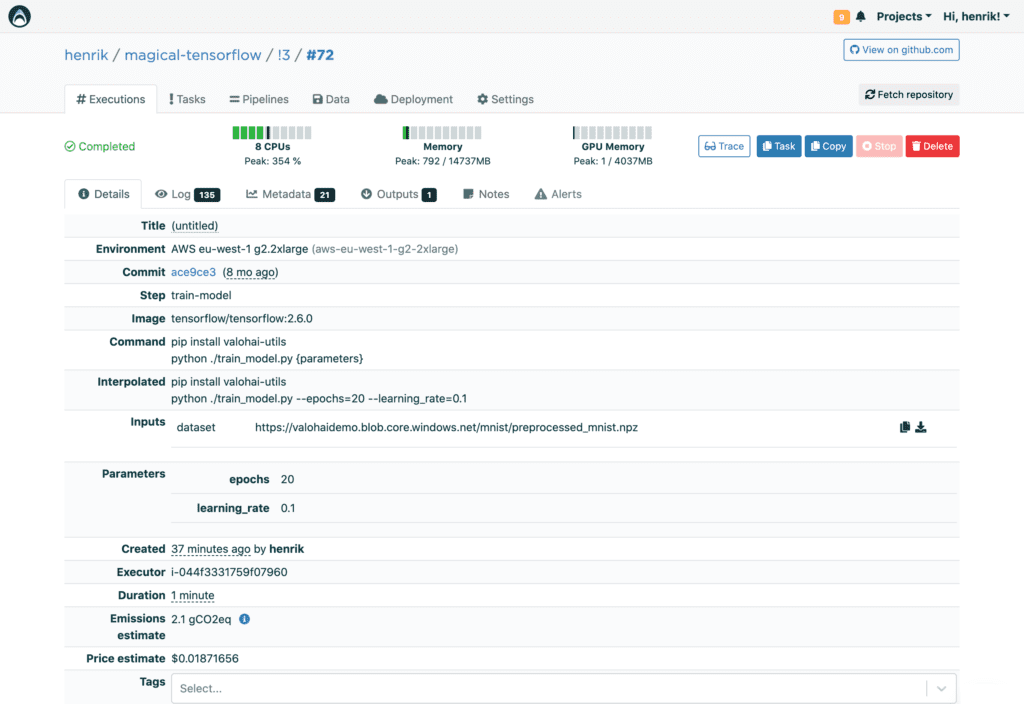

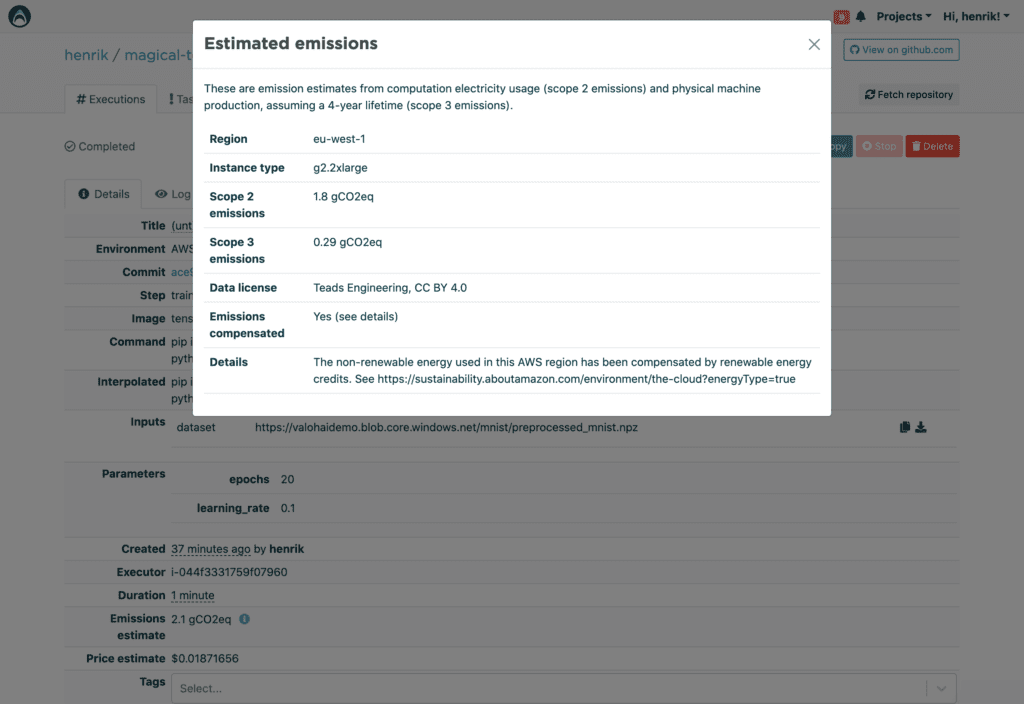

Automatic tracking

After selecting the location and type of infrastructure for an ML task, Valohai’s platform consolidates the metrics and automatically tracks them. These metrics include:

- Which machines were used

- How long they were used

- How much it cost to run them

In addition, Valohai has recently added carbon footprint estimations on top of these metrics. So far this is only available for AWS.

Valohai is not a carbon emission reduction tool. However, as a cloud-agnostic platform, Valohai provides a fuller context through its smart orchestration and automatic tracking features. With this information, data scientists and ML practitioners are empowered to make better choices about how they train and deploy ML models.

Towards a more sustainable future for ML

Machine learning is one way computers help humans make smarter decisions. It helps companies leverage information to obtain impactful insights without manual data analysis.

Left unchecked, however, ML comes at an environmental cost. The extent of that cost is unclear, but researchers and industry alike acknowledge the energy use and carbon footprint of ML and are actively seeking ways to reduce both.

Today’s companies can conduct greener ML by employing the above strategies and using software solutions that include eco-enablement features.
