Why Data Pros Have a Need for Speed

Keeping up with a digital world that’s moving faster than ever requires tools that enable near-real-time data pipelines.

Immediate Access

65% of survey respondents said their organization already has near-real-time data pipelines in production, and 24% said they plan to have them in production before the end of this year.

Five-Minute Rule

60% define real-time streaming as processing data within five minutes of ingesting it.

Impactful Outcomes

71% said they need to leverage “fast data/big data” to power core customer-facing apps, and 68% said they need it to provide analytics that optimize internal business processes.

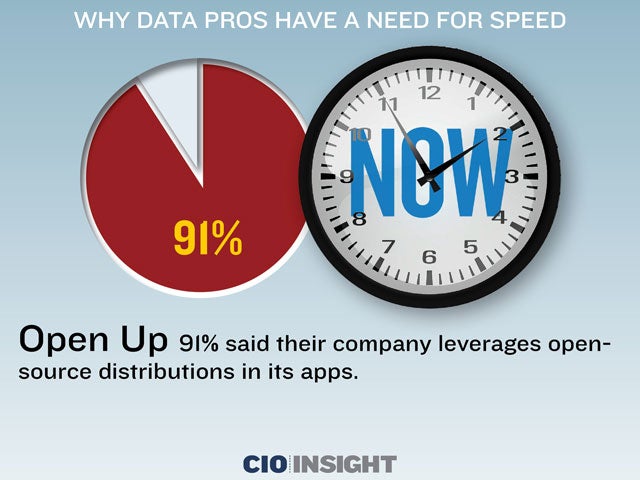

Open Up

91% said their company leverages open-source distributions in its apps.

Location, Location, Location

40% host their data pipeline in the cloud and 32% host it on-premises, while 28% use both.

Information Stream

92% said their organization plans to increase its investment in streaming data in the next year.

Scaling Back

79% said their company plans to reduce or eliminate investment in batch-only processing.

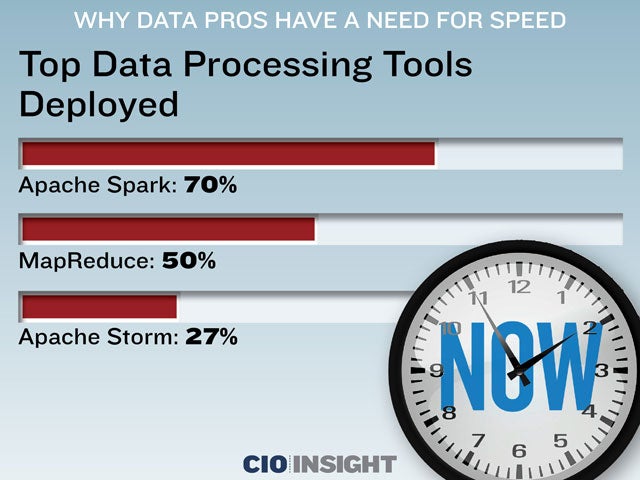

Top Data Processing Tools Deployed

Apache Spark: 70%, MapReduce: 50%, Apache Storm: 27%

Top Data Sink Tools

HDFS: 54%, Cassandra: 42%, Elasticsearch: 38%

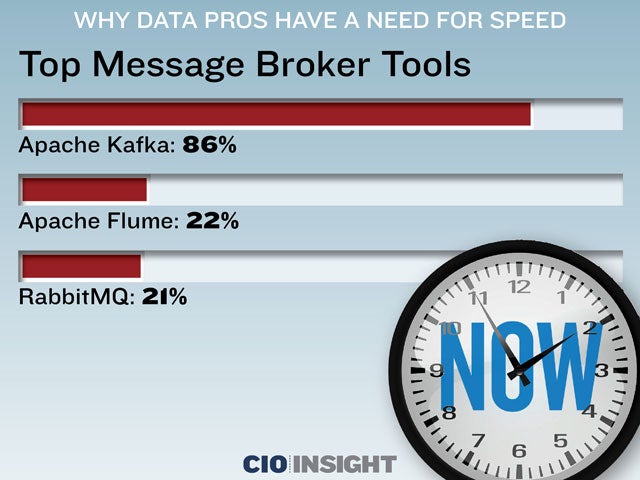

Top Message Broker Tools

Apache Kafka: 86%, Apache Flume: 22%, RabbitMQ: 21%

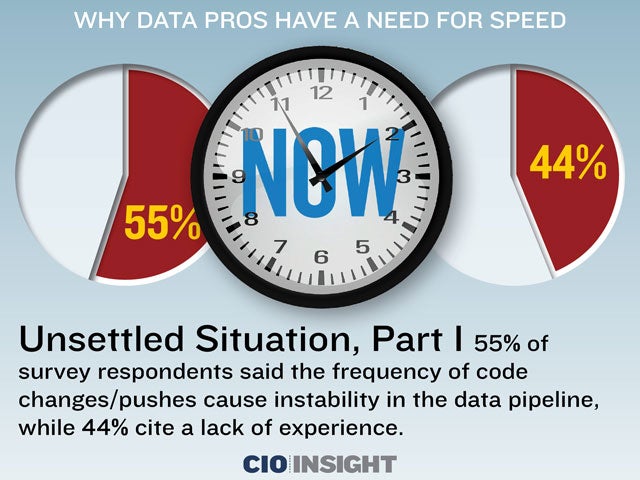

Unsettled Situation, Part I

55% of survey respondents said the frequency of code changes/pushes causes instability in the data pipeline, while 44% cited a lack of experience.

Unsettled Situation, Part II

38% said an inadequate capacity to handle surges creates instability in the data pipeline, while 30% said constant version updates do.